Running an LLM Locally Using Ollama

In the evolving world of AI, Large Language Models (LLMs) like GPT and BERT have become pivotal. However, accessing these models typically requires cloud services. But you can run some of these LLMs locally, right from your own hardware, assuming you have adequate system resources.

Running Ollama using Docker

Ollama is a command-line chatbot that simplifies the use of large language models, which offers a range of open-source models like Mistral, Llama 2, Code Llama, and more, catering to different requirements (see Ollama on GitHub for minimum system requirements).

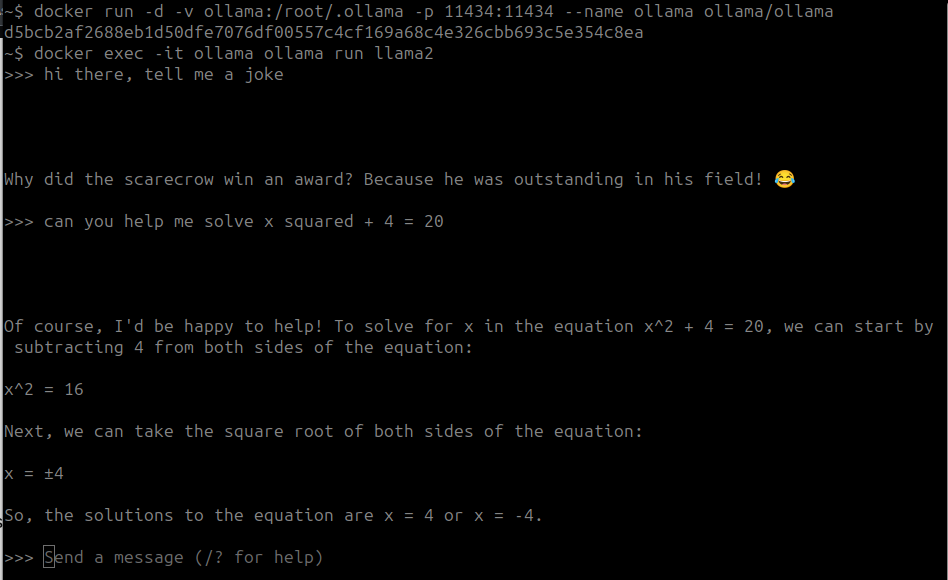

If, like me, you already have docker installed and want to avoid installing more stuff, you can just use docker (see Ollama on Docker Hub):

docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama

# then run the LLM

docker exec -it ollama ollama run llama2

Here is that chatbot telling me a joke and helping me solve an equation:

There are a number of other LLMs you can run as listed at Ollama Library, e.g.:

docker exec -it ollama ollama run mistral

You can also invoke this via an API call:

curl -X POST http://localhost:11434/api/generate -d '{

"model": "mistral",

"prompt":"what is python?"

}'

This approach with Ollama and Docker opens up new possibilities for utilizing LLMs directly from personal devices, ensuring both accessibility and privacy for users.